Here’s a short recap of Part 1 (or you can read it here): businesses are scanning all sorts of documents, performing sophisticated data extraction, and actively trying to manage data quality. Most of this validation happens at the page level, where users view entire documents to review the extracted data fields and then either verify or correct the data. The problem? How do you make this validation process more efficient and, more importantly, how do you secure sensitive information when validation exposes the entire document?

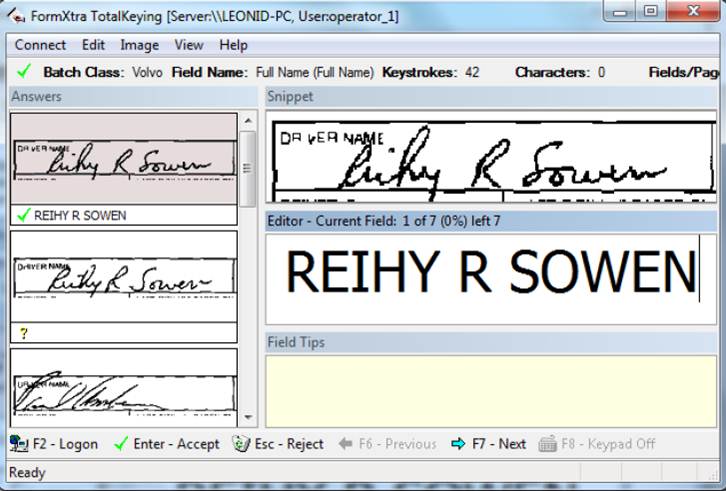

The answer is a concept we call transactional data validation. This approach uses field-level validation and improves both efficiency and security by distributing validation work as snippets of individual fields. Instead of seeing the entire page (or the page zoomed to the relevant field), validation staff see only the single field. Even better, staff can be assigned to review only specific field types, and the ability to look ahead to the next field speeds things up further. For financial data, for example, one group of staff validates only addresses, another reviews only Social Security numbers, and a third reviews only account numbers. This level of specificity makes reviewing and correcting data significantly more efficient: because staff focus on the same type of data, they become better tuned to judging its accuracy.
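To make the field-level idea more concrete, here is a minimal sketch of how a workflow might route extracted fields into one queue per field type, with a look-ahead for the reviewer. It assumes each extracted field arrives as a cropped image region plus its extracted value; the class and method names (`FieldSnippet`, `FieldQueues`, `submit`, `next_for`, `peek_ahead`) are hypothetical and not taken from any particular capture product.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSnippet:
    """One extracted field: a crop of the page image plus the value to verify.
    Hypothetical structure, for illustration only."""
    document_id: str
    field_type: str        # e.g. "address", "ssn", "account_number"
    image_region: tuple    # (x, y, width, height) of the crop on the page image
    extracted_value: str


class FieldQueues:
    """Routes snippets into one queue per field type, so a reviewer assigned
    to a field type only ever sees snippets of that type, never the full page."""

    def __init__(self):
        self._queues = defaultdict(deque)

    def submit(self, snippet):
        # Each field of a page is queued independently by its type.
        self._queues[snippet.field_type].append(snippet)

    def next_for(self, field_type):
        """Remove and return the next snippet for this field type, or None."""
        queue = self._queues[field_type]
        return queue.popleft() if queue else None

    def peek_ahead(self, field_type, n=1):
        """Return up to n upcoming snippets without removing them (look-ahead)."""
        return list(self._queues[field_type])[:n]
```

In this sketch, a reviewer assigned to addresses calls `next_for("address")` and gets address snippets from many different documents in turn, while Social Security numbers from the same pages sit in a separate queue that reviewer never touches.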


And as we've alluded to, separating data review allows businesses to direct sensitive data to staff with higher access levels. Businesses can set up validation workflows that divide review by individual field, so staff with more seniority, or in trusted positions, can access sensitive data while other staff review more basic data. This distribution of validation work also provides a form of security through obscurity, more precisely a kind of compartmentalization: sensitive information is split up so that no single person has access to the entire set of data. That prevents anyone from assembling the data into a single profile that could end up being used for identity theft or other malicious acts.
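The access-level and compartmentalization rules described above can be sketched as a simple assignment function. This is a minimal illustration under stated assumptions: the sensitivity levels in `FIELD_SENSITIVITY`, the numeric `clearance` on each reviewer, and the `max_fields_per_doc` limit are all hypothetical, not part of any specific product. The function picks the least-senior reviewer who is cleared for the field, which keeps trusted staff free for the most sensitive data, and refuses any assignment that would let one person see too many fields from the same document.

```python
from dataclasses import dataclass, field

# Hypothetical sensitivity level per field type (higher = more sensitive).
FIELD_SENSITIVITY = {"address": 1, "account_number": 2, "ssn": 3}


@dataclass
class Reviewer:
    name: str
    clearance: int                            # highest sensitivity level allowed
    seen: dict = field(default_factory=dict)  # document_id -> set of field types reviewed


def assign(field_type, document_id, reviewers, max_fields_per_doc=1):
    """Return a reviewer cleared for this field type who has reviewed fewer than
    max_fields_per_doc fields of this document, or None if nobody qualifies."""
    level = FIELD_SENSITIVITY[field_type]
    # Try the least-senior eligible reviewers first, reserving trusted staff.
    for reviewer in sorted(reviewers, key=lambda r: r.clearance):
        already_seen = reviewer.seen.get(document_id, set())
        if reviewer.clearance >= level and len(already_seen) < max_fields_per_doc:
            # Record the assignment so one person never accumulates the whole document.
            reviewer.seen.setdefault(document_id, set()).add(field_type)
            return reviewer
    return None
```

With `max_fields_per_doc=1`, each document's address, account number, and Social Security number end up with three different people, so no single reviewer holds enough of the record to build a complete profile. When `assign` returns `None`, a real workflow would need a policy for that case, such as holding the field until another cleared reviewer is available.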

The ability to efficiently, securely, and accurately review extracted data is a very important aspect of capture and is one that should be top-of-mind for any manager overseeing document capture operations.

To learn about the latest advancements in capture technology, download the white paper “Intelligent Document Recognition (IDR) Advanced Technology for Increased Productivity.”